You came to make better product choices. Good. This guide shows you how to use Mixpanel A/B testing to find clear wins. In the next few minutes, you will learn the nine KPIs that cut noise and turn data into simple, strong answers. You will see what each KPI means, how to track it, and how to read it. You will also get steps to build easy dashboards and keep your data clean.

Why does this matter? Many teams run tests but still feel lost. Numbers fight each other. Charts look busy. Goals keep moving. With the right KPIs, Mixpanel A/B testing turns into a calm, steady system. It shows what to keep, what to fix, and what to stop. It saves time and boosts growth.

Who is this for? If you build products, write code, plan growth, or lead a small team, this guide is for you. By the end, you will know which KPIs to track, how to set them up, and how to make choices with confidence. You will have a simple playbook for Mixpanel A/B testing, ready to use today.

How Mixpanel A/B testing works

Before we dive into KPIs, let’s set the stage. Mixpanel tracks what people do in your app or site. It uses events (things users do), properties (details about those events), and cohorts (groups of users who share traits). In Mixpanel A/B testing, you show two or more versions to users. You then compare results to find the better version.

- Events: clicks, signups, purchases, views.

- Properties: plan type, device, country, price.

- Cohorts: new users this week, users on iOS, high spenders.
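To make these three ideas concrete, here is a minimal Python sketch of a tagged event as plain data. It assumes the exposure event is named `$experiment_started` and carries `Experiment name` and `Variant name` properties, which is the convention Mixpanel's experiment reports look for as far as we know; check the current docs before you rely on it. The helper names and sample values are made up for illustration.

```python
# Assumption: exposure event name and property keys follow Mixpanel's
# experiment convention. Verify against the current documentation.
EXPOSURE_EVENT = "$experiment_started"


def exposure_event(distinct_id: str, experiment: str, variant: str) -> dict:
    """Build the payload that records 'this user saw this variant'."""
    return {
        "event": EXPOSURE_EVENT,
        "properties": {
            "distinct_id": distinct_id,
            "Experiment name": experiment,
            "Variant name": variant,
        },
    }


def in_cohort(event: dict, **required: str) -> bool:
    """A cohort here is just a filter: every required property must match."""
    props = event["properties"]
    return all(props.get(key) == value for key, value in required.items())


# Hypothetical example values.
event = exposure_event("user-123", "onboarding_checklist", "B")
event["properties"]["device"] = "iOS"
```

The event is the action, the properties are the details, and the cohort is a rule applied to those details.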

You will pick primary and secondary metrics. The primary metric is the one big goal for the test. It could be signup conversion or revenue per user. Secondary metrics check for side effects. Think of them as guardrails. They warn you if a test hurts load time, churn, or key actions.

How long should you run a test? Long enough to get a fair sample size. Short tests can lie. Very long tests can drift. Use your past traffic and baseline rates to pick a length. Keep the split even. Do not peek too early. And remember, Mixpanel A/B testing works best when you plan your metrics before you launch.
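One way to turn past traffic and a baseline rate into a test length is the standard sample size formula for comparing two proportions. The sketch below is a rough planning aid, not a Mixpanel feature. The defaults (5% significance, 80% power) and the function names are assumptions you should adjust.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in EACH variant for a two-sided two-proportion test."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)  # rate you hope the variant reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_variant: int, daily_eligible_users: int,
                variants: int = 2) -> int:
    """Days to fill every variant, assuming an even split of daily traffic."""
    return math.ceil(n_per_variant * variants / daily_eligible_users)
```

For example, `sample_size_per_variant(0.10, 0.10)` asks how many users each variant needs to detect a 10% relative lift on a 10% baseline. Smaller effects need many more users, so pick the smallest change you truly care about.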

Tip: A/B tests answer what works. For finding problems in flows or screens, add usability testing to your research mix. It helps you see why users struggle before you run another test.

Framework: selecting KPIs for Mixpanel A/B testing

Pick KPIs that match your growth goals. Map each KPI to one of four stages:

- Acquisition: Are we turning visitors into signups?

- Activation: Do new users get value fast?

- Retention: Do users come back again?

- Revenue: Do users pay and keep paying?

Keep one North Star metric for the big picture. It could be weekly active users or successful orders. Then add a small set of supporting KPIs. Keep them stable across tests to avoid metric drift. If you keep changing your targets, your team will chase noise.

Use two types of KPIs:

- Directional KPIs tell you if you are moving the right way. They are fast and early, like click-through or add-to-cart.

- Definitive KPIs prove impact, like purchased, retained at 30 days, or revenue per user.

Balance both. Directional KPIs give quick reads. Definitive KPIs give truth. In some cases, you may compare tools, like Amplitude A/B testing, but keep your main process simple and consistent. For most teams, one clear source of truth helps you move faster with Mixpanel A/B testing.

The 9 crucial KPIs Mixpanel A/B testing should track

1. Conversion rate by variant in Mixpanel A/B testing

Definition and use: Conversion rate is the share of users who take your key action. It might be signup, complete checkout, or start a trial. This is the most common primary metric in Mixpanel A/B testing.

Typical baselines: Many funnels convert between 1% and 20%, but your baseline depends on your product and traffic. Compare each variant’s rate to the control.

How to build the report:

- Create an event for the key action (e.g., Signed Up).

- Add a property for experiment name and variant.

- Use a funnel report with breakdown by variant.

Reading lifts and avoiding peeks:

- Focus on the final step, not just clicks.

- Wait for enough data to reduce random swings.

- Do not stop the test on a single-day spike.
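Once you export the funnel counts per variant, the lift math is short. This sketch assumes you already have the number of exposed users and the number who reached the final step for each variant. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class VariantCounts:
    exposed: int    # users who saw this variant
    converted: int  # users who completed the final funnel step

    @property
    def rate(self) -> float:
        """Conversion rate, or 0.0 when nobody was exposed."""
        return self.converted / self.exposed if self.exposed else 0.0


def relative_lift(control: VariantCounts, variant: VariantCounts) -> float:
    """Relative change of the variant's rate versus the control's rate."""
    if control.rate == 0:
        raise ValueError("control has no conversions; lift is undefined")
    return (variant.rate - control.rate) / control.rate
```

A control at 10% and a variant at 12% is a 2-point absolute gain but a 20% relative lift. Say which one you mean when you share results.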

2. Experiment reach and exposure in Mixpanel A/B testing

Why it matters: If users never see the change, your test cannot win. Reach is the count of eligible users. Exposure is how many of them actually got a variant.

Build cohorts:

- Eligible cohort: users who meet the rules for the test.

- Exposed cohort: users who actually saw variant A or B.

Fix uneven splits:

- Check for traffic imbalances by device, country, or channel.

- If one variant gets more mobile users, your read may be off.

- Adjust your flag or routing rules to keep splits fair.

Track exposure bias: Some users may switch states (logged in vs. logged out) and miss the test. Use guardrails to spot this early in Mixpanel A/B testing.
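A quick way to check split quality is a sample ratio mismatch test: compare the exposed counts you got with the split you planned. The sketch below uses a chi-square test with one degree of freedom for two variants, written with the standard library only. The function name is an assumption, and the alert threshold is yours to choose (many teams use a strict one, such as p < 0.001, so the alarm rarely fires by chance).

```python
import math


def srm_p_value(control_users: int, variant_users: int,
                expected_control_share: float = 0.5) -> float:
    """P-value that the observed split matches the planned split."""
    total = control_users + variant_users
    expected_control = total * expected_control_share
    expected_variant = total - expected_control
    chi2 = ((control_users - expected_control) ** 2 / expected_control
            + (variant_users - expected_variant) ** 2 / expected_variant)
    # For 1 degree of freedom, the chi-square tail equals erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))
```

A very small p-value means the split is probably broken. Fix the routing before you read any other KPI.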

3. Activation rate for Mixpanel A/B testing

What is activation? It is the moment a new user gets first value. Examples: create first project, send first message, or import first file. Activation shows if your onboarding works.

How to track:

- Define the activation event and a time window (like first 7 days).

- Build a funnel from Signup to Activation with a variant breakdown.

- Segment by channel or device to see where drop-offs are highest.

Tips:

- Use clear, simple steps in onboarding.

- Shorten time to first key action.

- Use tooltips and checklists to guide new users.

Cohort views help you see differences by source or region. That way, Mixpanel A/B testing shows which variant helps each group most.
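If you export signup and activation times, you can double-check the funnel number yourself. This sketch counts a user as activated only when the activation event lands inside the window after signup. The 7-day default mirrors the example above, and the input shape (user id mapped to a timestamp) is an assumption.

```python
from datetime import datetime, timedelta


def activation_rate(signups: dict, activations: dict,
                    window_days: int = 7) -> float:
    """Share of signed-up users who activated within the window.

    signups: user id -> signup time
    activations: user id -> first activation time
    """
    if not signups:
        return 0.0
    window = timedelta(days=window_days)
    activated = sum(
        1
        for user, signed_up in signups.items()
        if user in activations
        and timedelta(0) <= activations[user] - signed_up <= window
    )
    return activated / len(signups)
```

Run it once per variant and compare the two rates.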

4. Retention by cohort in Mixpanel A/B testing

Retention shows if users come back. If they do not return, your test win may fade. Track N-day retention (came back on a specific day, like day 1, day 7, or day 30), unbounded retention (came back on that day or any day after it), or rolling retention (came back within a period).

Build the report:

- Choose a starting event, like First Open or Signup.

- Use the Retention report and break down by variant.

- Add segments for plan type, device, or country.

Read the curve:

- A fast drop on day 1 means poor onboarding or weak value.

- Flat lines show stable use. Upward bumps can mean weekly use patterns.

- Seasonality matters: weekends and holidays can shift numbers.

Retention is a strong secondary KPI in Mixpanel A/B testing, and sometimes a primary KPI for products with slow payoffs.
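Here is a small sketch of N-day retention computed from raw dates, so you can see what the report measures. It assumes you pass only users whose start date is old enough to have reached the chosen day. The names and input shapes are illustrative.

```python
from datetime import date, timedelta


def n_day_retention(first_seen: dict, activity: dict, day: int) -> float:
    """Share of users who were active exactly `day` days after they started.

    first_seen: user id -> start date (e.g. signup)
    activity: user id -> set of dates with at least one event
    """
    if not first_seen:
        return 0.0
    retained = sum(
        1
        for user, start in first_seen.items()
        if start + timedelta(days=day) in activity.get(user, set())
    )
    return retained / len(first_seen)
```

Compute it for day 1, day 7, and day 30 in each variant to draw the curve.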

5. Completion rate for the core funnel in Mixpanel A/B testing

Your core funnel is the set of steps to your main goal: browse, add to cart, checkout, pay. Completion rate shows how many get from start to finish.

Identify drops:

- Use Funnel and Path Analysis to see where users leave.

- Check page load times and errors at each step.

- Look for copy confusion, extra fields, or bad mobile layouts.

Prioritize fixes:

- Solve the biggest drop first.

- Ship small, clear changes and re-test.

- Track completion rate as a primary KPI when the funnel is your core business path.

6. Time to value in Mixpanel A/B testing

Time to value is how fast a user gets a clear win. Shorter is better. If users see value fast, they stay.

Measure it:

- Start at first visit or signup.

- End at your key action (e.g., Created First Project).

- Chart the distribution. Look at the median and the long tail.

Handle outliers:

- Very long times can hide the real story.

- Segment by plan, device, or channel.

- Spot power users who reach value in minutes and learn from them.

Use properties to tag steps that speed up value. In Mixpanel A/B testing, a variant that cuts time to value often increases activation and retention too.
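The median and the long tail are easy to compute once you have one time-to-value number per user. This sketch takes hours from signup to key action and returns the median and the 90th percentile. The choice of the 90th percentile as the "tail" marker is an assumption, and it needs at least two users.

```python
from statistics import median, quantiles
from typing import Sequence


def time_to_value_summary(hours: Sequence[float]) -> dict:
    """Median and 90th percentile of time to value, in hours."""
    # quantiles(n=10) returns nine cut points; the last one is the 90th percentile.
    p90 = quantiles(hours, n=10, method="inclusive")[-1]
    return {"median_hours": median(hours), "p90_hours": p90}
```

The median resists outliers, so one user who took three weeks will not hide a real improvement. The 90th percentile tells you how long the slow group waits.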

7. Revenue or monetization impact in Mixpanel A/B testing

Money matters. Watch revenue KPIs like ARPU (average revenue per user), ARPPU (average revenue per paying user), and total revenue events.

Attribution windows:

- Not all revenue lands the same day. Pick a fair window (7–30 days).

- For trials, revenue may lag even more.

Guardrails:

- Track churn rate, refund rate, and support tickets.

- Make sure a short-term revenue bump does not hurt long-term health.

- Use revenue as a secondary KPI when the test goal is upstream, like engagement, but keep eyes on it.
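ARPU and ARPPU differ only in the denominator, which this sketch makes explicit. It assumes you have already summed each user's revenue inside your attribution window. The function name and input shape are illustrative.

```python
def revenue_kpis(exposed_users: int, revenue_by_user: dict) -> dict:
    """ARPU and ARPPU for one variant.

    exposed_users: all users who saw the variant
    revenue_by_user: user id -> revenue inside the attribution window
    """
    total = sum(revenue_by_user.values())
    paying = sum(1 for amount in revenue_by_user.values() if amount > 0)
    return {
        "total_revenue": total,
        "arpu": total / exposed_users if exposed_users else 0.0,  # per exposed user
        "arppu": total / paying if paying else 0.0,               # per paying user
    }
```

If ARPPU rises while ARPU stays flat, fewer users are paying more each. That is worth a second look before you ship.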

8. Experiment cadence and velocity in Mixpanel A/B testing

A single test is not a program. Track your testing engine too.

Key metrics:

- Tests per month.

- Win rate (share of tests that ship).

- Average uplift (how much winners move the needle).

Build a dashboard:

- Show open tests, stage, and ETA.

- Chart wins by area: onboarding, pricing, feature use.

- Surface learnings, not just numbers.

Balance test types:

- Exploratory tests find new ideas.

- Iterative tests smooth known flows.

- A healthy mix keeps growth steady and learning fast.

9. Statistical confidence and significance in Mixpanel A/B testing

You need enough data to trust your result. Before you launch, set your minimum detectable effect (the smallest change you care about) and your power (the chance of detecting a true effect of that size).

Interpret confidence:

- A “win” should be strong enough to repeat.

- Variance can be high for small groups; segment with care.

- Avoid early peeks. They inflate false wins.

When to stop:

- Stop when you hit your sample size and the effect is clear.

- Pause if guardrails show harm.

- Rerun if results are unclear or if traffic changed a lot mid-test.
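If you want to sanity-check a result by hand, a two-proportion z-test is one common choice for conversion-style metrics. The sketch below is a generic textbook test, not a description of how Mixpanel computes significance. Run it once, at your planned sample size, not every morning.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int,
                           conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))
```

A small p-value says the gap is unlikely to be random noise. It does not say the gap is big enough to matter, so read it next to the lift.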

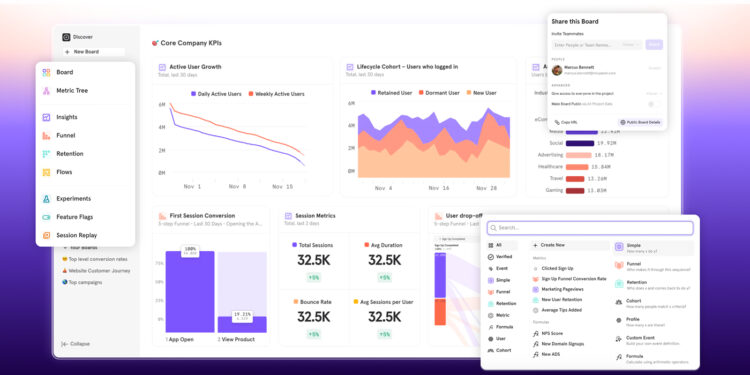

Implementation: setting up reports for Mixpanel A/B testing

Make setup simple and clean. Good setup makes good data.

Event naming and taxonomy checklist:

- Name events with verbs: Signed Up, Added to Cart, Purchased.

- Add clear properties: plan, price, device, source, experiment name, variant.

- Keep a shared tracking plan and a changelog.

Standard reports to create:

- Funnel with variant breakdown (for conversion and completion).

- Retention by cohort and variant.

- Insights report for time to value.

- Revenue dashboard with ARPU and ARPPU.

- Exposure report for reach and split quality.

Helpful extras:

- Build saved cohorts for eligible users, exposed users, and new users this week.

- Create a QA dashboard to check events fire as expected.

- Alongside Mixpanel A/B testing, run small observation studies or UX testing to learn the “why” behind the numbers.

- If you need quick setups for surveys or recordings, make a shortlist of UX testing tools that match your stack.

If your team needs outside help to review your plan, consider light UX audit services to catch tracking gaps early. A small check now avoids big headaches later.

Data quality: ensuring reliable Mixpanel A/B testing

Bad data breaks trust. Keep it clean with simple routines.

Governance and QA:

- Keep a single owner for the tracking plan.

- Review names, properties, and definitions every month.

- Test key flows in staging and production before you launch a test.

Tackle messy data:

- Filter bots and internal traffic.

- Deduplicate events if retries or double submits occur.

- Use identity management to merge users across web and mobile.
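The first two cleanup steps can be sketched on exported events. This example drops events from a list of internal user ids and removes repeats that share the same event name, user, and `$insert_id`. To our knowledge `$insert_id` is the property Mixpanel uses for deduplication, but treat that and the event shape here as assumptions.

```python
def clean_events(events: list, internal_ids: frozenset = frozenset()) -> list:
    """Drop internal traffic and duplicate events, keeping the first copy."""
    seen = set()
    cleaned = []
    for event in events:
        props = event.get("properties", {})
        if props.get("distinct_id") in internal_ids:
            continue  # internal or test account
        insert_id = props.get("$insert_id")
        key = (event.get("event"), props.get("distinct_id"), insert_id)
        if insert_id is not None and key in seen:
            continue  # retry or double submit
        seen.add(key)
        cleaned.append(event)
    return cleaned
```

Events without an `$insert_id` are kept as they are, since there is no safe way to tell a repeat from a real second action.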

Backfill and versioning:

- When you rename events, map old to new so reports keep working.

- Version your experiments. Each new test should have a new name or tag.

- Keep start and end dates in properties for clarity.

If you need to dig deep into flows, run a targeted UX audit to see where users get stuck and how it affects test reads. Clean flows help Mixpanel A/B testing show real impact.

Decision-making: reading results and acting fast

Turn results into action with a simple playbook.

Set thresholds:

- Ship when the primary metric wins with strong confidence and guardrails look safe.

- Iterate when results are close and you have ideas to improve.

- Roll back when the variant harms key guardrails, even if clicks look good.
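Writing the thresholds down as code keeps the call from shifting after you see the data. This sketch is one possible encoding of the three rules above. The 0.05 cutoff is an assumption, and so is the choice to roll back when the primary metric is significantly worse.

```python
def decide(primary_lift: float, p_value: float, guardrails_ok: bool,
           alpha: float = 0.05) -> str:
    """Return 'ship', 'iterate', or 'roll back' for a finished test."""
    if not guardrails_ok:
        return "roll back"  # harm to a guardrail beats any win
    significant = p_value < alpha
    if significant and primary_lift > 0:
        return "ship"
    if significant and primary_lift < 0:
        return "roll back"  # assumption: a clear loss is also a rollback
    return "iterate"        # close call: improve the idea and re-test
```

Agree on `alpha` and the guardrail list before launch, then let the function make the first call.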

Trade-offs:

- A test may boost clicks but hurt retention. Balance short-term wins with long-term health.

- If a test helps a small, high-value cohort, it can still be a win. Segment before you judge.

Document and learn:

- Write a short summary: hypothesis, setup, KPIs, result, next step.

- Add screenshots of key reports.

- Store learnings in one place so the team does not repeat old tests.

If your team lacks in-house bandwidth to run research between tests, consider UX testing services for short, focused studies that answer the “why” behind the numbers. This keeps your Mixpanel A/B testing program fast and human-centered.

Advanced tactics for Mixpanel A/B testing

Once the basics hum, try these advanced moves.

Segmented effects and interactions:

- Check if a variant helps new users but not power users.

- Look for device, country, or plan differences.

- Guard against over-segmentation. Keep groups large enough to trust.

Sequential testing and bandits:

- Sequential tests let you check data at set times with less risk of false wins.

- Multi-armed bandits shift more traffic to winners while the test runs.

- Use these when you need faster decisions and have stable goals.

Feature flags, rollouts, and holdouts:

- Use flags to ship safe and fast.

- Do a slow rollout: 1%, 10%, 25%, and so on.

- Keep a small holdout group to measure long-term lift over weeks. This helps confirm that your Mixpanel A/B testing win holds up in the wild.
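A slow rollout and a holdout both rely on stable bucketing: the same user must land in the same bucket every time. Here is a minimal sketch that hashes the user id with the flag name. It is a generic pattern, not the API of any flag tool, and the names and the 5% holdout default are assumptions.

```python
import hashlib

BUCKETS = 10_000  # gives 0.01% resolution


def bucket(user_id: str, salt: str) -> int:
    """Map a user to a stable bucket in [0, BUCKETS) for a given salt."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % BUCKETS


def in_rollout(user_id: str, flag: str, percent: float) -> bool:
    """True if the user is inside the first `percent` of buckets."""
    return bucket(user_id, flag) < percent * BUCKETS / 100


def in_holdout(user_id: str, flag: str, percent: float = 5.0) -> bool:
    """Separate salt, so holdout membership is independent of the rollout."""
    return bucket(user_id, f"{flag}:holdout") < percent * BUCKETS / 100
```

Because the bucket never changes, a user who got the feature at 1% still has it at 10% and 25%. Check `in_holdout` first and keep those users on the old experience.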

Case study: from noisy tests to clear wins

Context:

A small SaaS tool had flat growth. Signups were steady, but few users became active. The team ran many tests but saw mixed results. They chose to reset with a clear Mixpanel A/B testing plan.

Hypothesis and KPIs:

They believed a simpler first project flow would boost activation and 30-day retention. Primary KPI: Activation within 7 days. Secondary: Day-30 retention, time to value, and ARPU guardrail.

Setup:

- Events: Signed Up, Created Project, Invited Teammate, Purchased.

- Properties: experiment name, variant, plan, device.

- Cohorts: new users by week, exposed users, high-intent traffic.

Results:

- Variant B cut time to value from 2 days to 8 hours.

- Activation rose from 28% to 37%.

- Day-30 retention went from 18% to 23%.

- ARPU stayed flat, so there was no revenue risk.

Lessons:

- A clear activation target beats a vague “engagement” metric.

- Short, simple onboarding steps help more than flashy UI changes.

- One dashboard for the whole team speeds decisions.

Playbook to reuse:

- Start with clear activation and retention KPIs.

- Track exposure and splits daily.

- Ship, learn, and log every test.

As they improved onboarding copy and steps, they also used outside UX design services for a quick polish on forms and empty states. The best part: their wins stacked, and the team felt calm and focused.

Common pitfalls to avoid in Mixpanel A/B testing

Metric shopping and p-hacking:

- Do not change your primary KPI after you see results.

- Do not test dozens of slices just to find one that “wins.”
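The risk of slice hunting is simple arithmetic. If each slice has a 5% chance of a false win, the chance that at least one slice "wins" by luck grows fast. The sketch below assumes the slices are independent, which is a simplification, and shows the Bonferroni correction as one conservative fix.

```python
def chance_of_false_win(slices: int, alpha: float = 0.05) -> float:
    """Chance that at least one of `slices` independent checks wins by luck."""
    return 1 - (1 - alpha) ** slices


def bonferroni_alpha(slices: int, alpha: float = 0.05) -> float:
    """Stricter per-slice cutoff that keeps the overall false-win rate near alpha."""
    return alpha / slices
```

With 20 slices at a 5% cutoff, the chance of at least one false win is about 64%. Plan a few slices in advance and hold each one to the stricter cutoff.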

Peeking early and stopping on spikes:

- Early peeks inflate false wins.

- Keep your planned run time unless a guardrail fires.

Misaligned cohorts and changing definitions:

- If your cohort rules move, your data moves too.

- Lock your definitions before the test starts.

One more watch-out: keep your tracking simple. A crowded dashboard makes it easy to miss the real story in Mixpanel A/B testing.

FAQs about Mixpanel A/B testing

How long should a test run?

- Long enough to reach the sample size you planned for the minimum effect you care about. For many teams, this is 1–2 weeks for high-traffic flows and longer for low-traffic ones. Do not stop on the first “good day.”

What if KPIs disagree?

- Go back to your plan. The primary KPI wins the call. Check guardrails for harm. If the result is close, look at segments you planned in advance. If still unclear, rerun with a tighter scope.

How to handle low traffic or long cycles?

- Use bigger effects (fewer, bolder changes) so you can see a clear signal.

- Batch ideas into themed releases.

- Extend the test window, or switch to directional KPIs first, then confirm with a definitive KPI later. Keep your Mixpanel A/B testing plan steady so you can compare tests over time.

Resources and next steps

KPI checklist:

- Primary KPI tied to your goal (conversion, activation, retention, revenue).

- 2–3 guardrail KPIs (performance, churn, support tickets).

- Exposure and split checks.

- Clear stop rules and sample size plan.

Dashboard starter list:

- Funnel with variant breakdown for conversion and completion.

- Retention by cohort and variant.

- Insights chart for time to value.

- Revenue view with ARPU and ARPPU.

- Program dashboard for cadence, win rate, average uplift.

Templates to copy:

- Experiment brief: hypothesis, setup, KPIs, timelines, risks.

- Results summary: charts, learnings, next step.

- Change log: events, properties, and flag changes.

If your next test touches your UI, and you need extra help, you might choose to hire UI/UX designers to speed up design and implementation while your team keeps focus on data and rollout. Keep your toolchain simple, keep your plan clear, and keep your Mixpanel A/B testing loop tight.

Conclusion

Ready to turn noise into wins? Pick one key flow, set a clear primary KPI, and launch your next Mixpanel A/B testing run this week. Use the nine KPIs to track what matters, watch your guardrails, and ship with confidence. Start small today, learn fast, and grow steadily. Your users, your team, and your bottom line will thank you.