Why an AB split test drives faster revenue gains

Guessing is risky. An ab split test makes choices clear. It shows what wins and what loses. With it, you stop guessing and start using proof. This keeps money safe. It also helps your site grow. In your first few tests, you can spot small wins. Over the following months, those wins can stack.

Here is the big idea. An ab split test compares two versions of a page or of one part of a page. The “A” version is the current one. The “B” version is the change. Each visitor sees one or the other. You then track who buys, signs up, or clicks. The better version stays. The weaker one goes.

This process reduces risk and raises ROI. It does so because every change must earn its keep. You do not ship a full redesign and hope. You ship a small change and measure. In this guide, you will learn the steps to plan, run, and scale an ab split test program. You will see what to test, how to measure, and how to roll out wins with care.

AB split test fundamentals

What it is and when to use it

An ab split test is a simple head-to-head race. Show half of users the control (A). Show the other half a variant (B). Count conversions. A conversion can be a sale, a lead, a click, or a view. Over a set time, you learn which version works better.
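One common way to split traffic is to hash a visitor ID, so the same person always sees the same version. The sketch below assumes you have a stable visitor ID (for example, from a cookie). The function name `assign_variant` and the experiment name are made up for illustration; your testing tool may split traffic differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str) -> str:
    """Return "A" or "B" for a visitor, stable across repeat visits."""
    # Hash the experiment name together with the visitor ID so that
    # different experiments split the same visitors independently.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the hash to a bucket from 0 to 99; buckets 0-49 see the control.
    bucket = int(digest[:8], 16) % 100
    return "A" if bucket < 50 else "B"


# Hypothetical usage: the same visitor gets the same answer every time.
print(assign_variant("visitor-123", "2025-01-pricing-clarity"))
```

Hashing instead of picking at random on each page load keeps the experience consistent for returning visitors, which keeps the counts clean.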

Use an ab split test when you have one clear change to try. Examples:

- A new headline on a landing page

- A shorter form on checkout

- A different price layout on your pricing page

Run an ab split test for parts that matter to money. If the page can change sales, it is a good place to test. This includes product pages, carts, and key buttons. When you have data, you can move fast and avoid costly mistakes.

Define your test cycle. A cycle has four steps:

- Pick the goal, like “more adds to cart.”

- Make a small change tied to that goal.

- Run the test for enough time and traffic.

- Choose the winner and log the learning.

When an AB split test beats redesigns or opinion-led changes

A full redesign takes months. It can break what already works. Opinions also vary. The CEO may like one color. The designer may like another. An ab split test turns debate into clear numbers. You learn what customers choose, not what people guess. That is why an ab split test often beats a big redesign or a decision made in a meeting.

When not to run an AB split test

Do not test if traffic is too low. If few people visit, results will be noisy. Do not test on a page still in heavy flux. Wait until it is stable. Watch out for holiday spikes or odd events. Seasonal swings can bend results. In those times, pause or run longer so the data is fair.

Set revenue-first goals and hypotheses

Map tests to business outcomes

Tie each test to money. Good core metrics include:

- Conversion rate (how many visitors take the key action)

- Revenue (total money made)

- AOV (average order value, or money per order)

- RPV (revenue per visitor, or money per visitor)

- Lead quality (leads that turn into paying users)

If your ab split test boosts clicks but lowers AOV, that may not help. Watch the full picture. Check both the rate and the value.
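Here is a small sketch of that check. The numbers are invented for illustration, and the helper name `summarize` is hypothetical. It shows how a variant can win on conversion rate and still lose on revenue per visitor.

```python
def summarize(visitors: int, orders: int, revenue: float) -> dict:
    """Compute the core money metrics for one variant."""
    return {
        "conversion_rate": orders / visitors,          # orders per visitor
        "aov": revenue / orders if orders else 0.0,    # money per order
        "rpv": revenue / visitors,                     # money per visitor
    }


# Made-up example data: B converts more visitors but at a lower order value.
a = summarize(visitors=10_000, orders=300, revenue=24_000.0)
b = summarize(visitors=10_000, orders=330, revenue=23_100.0)

for name, stats in (("A", a), ("B", b)):
    print(
        f"{name}: CR={stats['conversion_rate']:.2%} "
        f"AOV=${stats['aov']:.2f} RPV=${stats['rpv']:.2f}"
    )
```

In this example B has the higher conversion rate, yet A earns more per visitor. RPV blends rate and value, so it is a handy tie-breaker.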

Craft strong hypotheses

A strong hypothesis is a simple plan with a reason. Use this shape:

- Problem: What blocks the user?

- Change: What will you change to fix it?

- Impact: What result do you expect and why?

- Metric: What will you measure?

Example: “Users fear fees at checkout. If we show shipping early, we expect more completes, raising conversion rate by 10%, measured by orders per visitor.” This keeps your ab split test focused and easy to judge.

Prioritize what to test

Frameworks that focus on sales impact

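You will have many ideas. Pick the ones that help sales first. Use a simple score like ICE (impact, confidence, ease) or PIE (potential, importance, ease). An ICE-style score rates three things:

- Impact: How much lift could this make?

- Confidence: How sure are you it will work?

- Effort: How hard is it to ship? Give easier changes a higher score.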

Score each idea from 1 to 5. Test high-score items first, especially on high-intent pages like cart and checkout. Tie each idea to a money metric so your ab split test stays laser focused.
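The scoring above can be sketched in a few lines. This version assumes the third score is recorded as ease, so a higher number means less effort, and it averages the three scores. Averaging is one common choice, not the only one. The ideas and scores are made up.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # 1-5, expected lift
    confidence: int  # 1-5, how sure you are
    ease: int        # 1-5, where 5 means least effort to ship

    @property
    def score(self) -> float:
        # Simple average of the three scores (an assumption, see above).
        return (self.impact + self.confidence + self.ease) / 3


# Hypothetical backlog for illustration only.
backlog = [
    Idea("Show shipping cost in cart", impact=4, confidence=4, ease=5),
    Idea("Rebuild pricing page", impact=5, confidence=2, ease=1),
    Idea("New homepage hero image", impact=2, confidence=3, ease=4),
]

# Highest score first: these are the tests to run first.
ranked = sorted(backlog, key=lambda idea: idea.score, reverse=True)
for idea in ranked:
    print(f"{idea.score:.2f}  {idea.name}")
```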

Build a balanced roadmap

Plan quick wins and big bets. Quick wins are small, fast changes with a good chance to lift results. Big bets are deeper ideas that may take longer but can unlock new growth. Balance both. Set a steady test cadence, like one to three tests per week. Keep a small buffer for surprises so your program never stalls.

Measurement plan and analytics setup

Track the right events

Before you run an ab split test, make sure you can measure success. Track:

- Primary metrics: conversion rate, revenue, AOV

- Secondary metrics: bounce rate, time on page, error rate

- Guardrail metrics: refunds, support tickets, page speed

Add events for key steps, like view product, add to cart, start checkout, and complete checkout. Clear data makes clear choices.

Clean data, clean decisions

Clean data starts with naming rules. Use the same UTM tags for all traffic. Map goals the same way across pages. Use a tidy experiment name, like “2025-01-pricing-clarity.” This keeps your ab split test history easy to read and share.

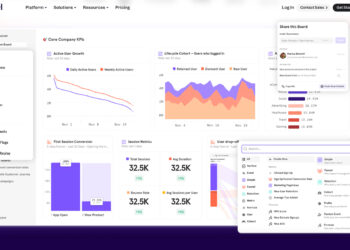

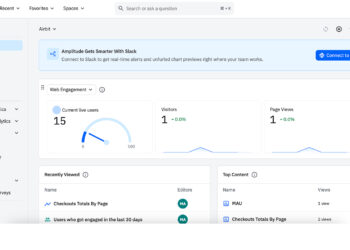

If you track product analytics, tools like Amplitude and Mixpanel can help you dig into paths and cohorts. They show how groups behave, not just totals. That way, you can see who wins, and why.

Test design best practices

Keep variables tight

Change one main thing per variant. If you change headline, price, and images at the same time, you will not know what worked. Your ab split test should test one primary lever, with other parts held steady.

Plan sample size and run time

Give your test enough traffic and time. If you stop too soon, early noise can fool you. Plan a minimum run, often at least one to two full business cycles. This means you pass through weekdays and weekends. If your site is small, run longer. Do not peek and stop at the first sign of a lift. Let the ab split test finish so the result is solid.
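To get a feel for "enough traffic," you can estimate a sample size before launch. The sketch below uses a standard normal-approximation formula for comparing two conversion rates. The 5% significance level and 80% power are common defaults, assumed here rather than taken from this guide. The function names and the traffic figures are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,   # assumed two-sided significance level
    power: float = 0.80,   # assumed chance of detecting a real lift
) -> int:
    """Approximate visitors needed in EACH variant."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(per_variant: int, daily_visitors: int) -> int:
    """Days needed when daily traffic is split across two variants."""
    return math.ceil(2 * per_variant / daily_visitors)


# Made-up inputs: 3% baseline conversion, hoping to detect a 10% relative lift.
n = visitors_per_variant(baseline_rate=0.03, relative_lift=0.10)
print(n, "visitors per variant")
print(days_to_run(n, daily_visitors=5_000), "days at 5,000 visitors per day")
```

With these inputs the estimate comes out at roughly 53,000 visitors per variant. Small lifts on low conversion rates need a lot of traffic, which is why small sites should test bigger changes.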

Quality assurance before launch

Do QA before you go live. Check that both A and B load fast. Test on phones, tablets, and desktops. Try popular browsers. Make sure tracking fires on both versions. Fix any bugs before users see them. If you need help, try UX testing or basic usability testing to catch confusion and errors early. A simple pass with UX testing tools also helps spot layout breaks and slow scripts.

AB split test ideas that raise sales

High-impact pages

Start where money changes hands:

- Product detail pages: clarify value, add trust badges, improve images

- Pricing pages: show simple plans and clear savings

- Cart: reduce clutter and show total cost early

- Checkout: fewer fields, more payment options, strong progress cues

- Post-purchase: add an order bump or simple cross-sell

Each idea can be a clean ab split test. For example, show or hide guest checkout and measure completes.

Messaging and offer optimization

Words sell. Try new headlines that speak to pain and gain. Test your value prop. Add risk reversal like free returns. Use social proof with reviews or counts. Test smart urgency, like limited stock if true. Make guarantees clear. Your ab split test can prove which message moves people to act.

UX friction fixes

Friction kills sales. Look for forms that are too long, fields with errors, or unclear labels. Try:

- Fewer form fields

- Inline help

- Clear shipping and tax info

- Trust badges near the pay button

When in doubt, call in UX design services to clean up flows, or ask a partner for a UX audit to spot hidden blockers. If deeper checks are needed, a full UX audit can map issues across your funnel.

Acquisition alignment

Make your ads and emails match the landing page. If an ad says “Free 2-day shipping,” the page should repeat it up top. Message match builds trust. This is a great ab split test: version A with a generic headline, version B with ad-to-page message match.

Audience targeting and segmentation

Test smarter by cohort

Not all users are the same. New visitors act differently than returning ones. Phone users have different needs than desktop users. Plan your ab split test with segments in mind:

- New vs returning

- Device type

- Traffic source

- Geo region

- Intent level (viewed product vs home page)

You can learn that a change helps mobile but hurts desktop. Then you can ship it to the right group only.
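A per-segment breakdown like this can be sketched as follows. The row format (`device`, `variant`, `converted`) and the function name are assumptions for illustration, and the eight rows are far too few for a real decision. They only show the shape of the calculation.

```python
from collections import defaultdict


def conversion_by_segment(rows: list[dict]) -> dict:
    """Return the conversion rate for each (device, variant) pair."""
    # (device, variant) -> [visitors, conversions]
    totals = defaultdict(lambda: [0, 0])
    for row in rows:
        key = (row["device"], row["variant"])
        totals[key][0] += 1
        totals[key][1] += int(row["converted"])
    return {key: conv / visits for key, (visits, conv) in totals.items()}


# Tiny made-up data set: B helps on mobile and hurts on desktop.
rows = [
    {"device": "mobile", "variant": "A", "converted": False},
    {"device": "mobile", "variant": "A", "converted": True},
    {"device": "mobile", "variant": "B", "converted": True},
    {"device": "mobile", "variant": "B", "converted": True},
    {"device": "desktop", "variant": "A", "converted": True},
    {"device": "desktop", "variant": "A", "converted": True},
    {"device": "desktop", "variant": "B", "converted": False},
    {"device": "desktop", "variant": "B", "converted": True},
]

rates = conversion_by_segment(rows)
for (device, variant), rate in sorted(rates.items()):
    print(f"{device:8s} {variant}: {rate:.0%}")
```

Each segment has less traffic than the whole test, so segment results are noisier. Treat a surprising segment win as an idea for a follow-up test.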

Personalization vs AB split test

Personalization can help, but test it first. Start with an ab split test to prove lift. If a tailored banner for repeat buyers wins, then roll it out to that group. Keep a holdout group to guard against drift. This way, your custom content keeps earning its place.

Interpreting results (without traps)

Statistical vs practical significance

A result can be “significant” but still small. Look at both stats and the money. Ask: does this change move revenue or profit in a real way? Your ab split test should help the business, not just make a graph look nice. If a small lift adds up over big traffic, great. If it is tiny and costly to maintain, maybe skip it.
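Here is one way to look at both sides. The sketch uses a pooled two-proportion z-test, a common textbook check that may differ from what your testing tool runs. All numbers, the monthly traffic, and the order value are invented, and the function name `compare` is hypothetical.

```python
import math
from statistics import NormalDist


def compare(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (absolute lift of B over A, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, p_value


# Made-up result: 3.0% vs 3.2% conversion on 100,000 visitors each.
lift, p_value = compare(conv_a=3_000, n_a=100_000, conv_b=3_200, n_b=100_000)
print(f"lift={lift:.4f}  p-value={p_value:.4f}")

# Practical check, using assumed traffic and order value.
monthly_visitors = 50_000
aov = 80.0
extra_revenue = lift * monthly_visitors * aov
print(f"extra revenue per month: about ${extra_revenue:,.0f}")
```

The p-value says whether the lift is likely real. The revenue estimate says whether it is worth shipping and maintaining.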

Guard against false wins

Watch out for traps:

- Sample ratio mismatch (A and B do not split as planned)

- Novelty effects (new things can spike then fade)

- Seasonality (holidays or sales events)

If you see odd jumps, extend the test. Re-run it if needed. Keep notes so the team learns and avoids repeat errors.
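Sample ratio mismatch is the easiest of these traps to check with numbers. The sketch below runs a chi-square goodness-of-fit test with one degree of freedom on the visitor counts. The cutoff of 0.001 is a commonly used convention and is an assumption here, as are the function name and the example counts.

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """P-value for the observed split versus the planned split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (
        (visitors_a - expected_a) ** 2 / expected_a
        + (visitors_b - expected_b) ** 2 / expected_b
    )
    # For one degree of freedom, the chi-square tail equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


SRM_THRESHOLD = 0.001  # assumed cutoff; a lower p-value suggests a broken split

# Made-up counts for a test planned as a 50/50 split.
for a_count, b_count in ((10_050, 9_950), (10_300, 9_700)):
    p = srm_p_value(a_count, b_count)
    status = "investigate" if p < SRM_THRESHOLD else "ok"
    print(f"A={a_count} B={b_count} p={p:.5f} -> {status}")
```

A flagged split usually points to a bug in assignment or tracking, so fix the cause before you trust the conversion numbers.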

Roll out, learn, and scale

Deploy winning variants safely

Move from 10% of traffic to 50%, then to 100% if results hold. This staged rollout lowers risk. Keep a small holdout group for a time. Watch guardrail metrics like refunds and page speed. Your ab split test win is only a true win if it stays strong in the wild.
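The staged rollout can be written down as a simple rule. This sketch assumes that a failed guardrail check sends the variant back to 0% of traffic, which is one cautious choice and not the only one. The function name and the stage list are for illustration.

```python
ROLLOUT_STAGES = [10, 50, 100]  # percent of traffic, as described above


def next_stage(current_percent: int, guardrails_ok: bool) -> int:
    """Return the traffic percentage to use for the next period."""
    if not guardrails_ok:
        # Assumption: roll back fully when refunds, speed, or errors look bad.
        return 0
    later = [stage for stage in ROLLOUT_STAGES if stage > current_percent]
    # Stay at the current level once the last stage is reached.
    return later[0] if later else current_percent


# Hypothetical walk through a healthy rollout, then a failed check.
print(next_stage(10, guardrails_ok=True))
print(next_stage(50, guardrails_ok=True))
print(next_stage(50, guardrails_ok=False))
```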

Build a durable experimentation program

Make a central library. Log the hypothesis, screenshots, dates, and results for every ab split test. Add templates for briefs and reports. Run monthly reviews. Ask “What did we learn?” and “What pattern do we see?” Over time, this library becomes gold. It guides new tests and saves you from old mistakes. If you need extra muscle, look into UX testing services to scale research alongside testing. As you grow, you may decide to hire UI/UX designers to speed up design and delivery.

Compliance, accessibility, and performance

Respect privacy and consent

Be kind with user data. Use cookie consent tools that are easy to read. Track only what you need. Make it simple to opt out. Trust builds long-term gains that beat any short-term trick. Keep your ab split test plans within your privacy rules.

Accessibility and speed

Fast pages help everyone. So do clear colors and good contrast. Run web accessibility tests on your pages so that more people can use your site with ease. When you check colors, use a color contrast checker that follows WCAG to make sure text stands out. Need help picking a brand color that passes? A color picker that samples from an image can match shades in your photos. Color accessibility and contrast tools make the job simple for busy teams. For bigger palettes, you can explore palette generators and extractors, and if you use utility CSS, a Tailwind color generator can speed up builds. Keep images small, code lean, and pages snappy. Better access plus better speed tends to lead to better sales, and your next ab split test can show it.

AB split test FAQs

How long should an ab split test run?

Run long enough to cover a full business cycle. For many sites, that means at least two weeks so you capture weekdays and weekends. If traffic is high, you may reach clear results faster. If traffic is low, you may need more time. End only when the result is stable and your key metrics (like conversion rate and revenue) make sense. If in doubt, keep the test running a bit longer to be safe.

Can small sites run an ab split test effectively?

Yes. Make changes big and clear so the signal is easier to see. Test on pages with higher traffic, like your main product page or a top blog post with a lead form. Batch ideas and test them one by one. Share learnings across pages. You can also borrow from broader research, like findings from UX audit reports, or learn from industry studies. Use small tools and simple checks. Over time, even one small win per month can compound.

What if variants tie?

Ties happen. If A and B are close, try these steps:

- Segment the results by device or source to spot hidden wins

- Run the test longer to reduce noise

- Test a bigger change next time

- Combine two small changes into one clear variant

A tie still teaches you. It tells you that this lever is not strong. Move on to a bigger lever for your next ab split test.

Resources and templates

Ready-to-use assets

Speed up your work with simple tools:

- A one-page ab split test checklist so you never miss steps

- A hypothesis worksheet with fields for problem, change, impact, and metric

- A test report template with space for screenshots, numbers, and the final call

Save these in a shared folder. Update them as your team learns more.

Tooling notes

Set up an experiment tracking sheet. List status, owner, dates, traffic, and outcome. Add a dashboard for your core metrics. If your team needs to explore deeper paths, tools like Amplitude or Mixpanel give strong cohort views. For expert review of flows, consider a UX audit as a periodic check-up, and keep a shortlist of UX testing tools to run quick checks before and after each launch.

Putting it all together with examples

Here is a simple flow you can follow this week:

- Pick one page: your top product page.

- Pick one problem: users leave before adding to cart.

- Draft a hypothesis: “A bigger CTA with a stronger verb will raise adds to cart by 12%.”

- Build the variant: larger button, stronger verb, and nothing else changed.

- QA on mobile and desktop.

- Launch the ab split test for two weeks.

- Watch core metrics. Do not stop early.

- Choose the winner, roll it out in stages, and log the learning.

Now try the same pattern on pricing. Try showing the most popular plan with a helpful note. Then test clarity on fees at checkout. Keep each ab split test focused. Keep notes. Over a few cycles, you will have a stronger funnel end to end.

Extra tips for smoother testing days

- Name tests in a clear way so future you can find them fast

- Re-use blocks, banners, and components to ship fast

- Keep a weekly review where you pick, build, and ship the next test

- Share wins with the whole team so energy stays high

As your program grows, your needs may change. You might bring in UX design services for page redesigns that still feed into testing. When bigger questions come up, schedule UX testing sessions with real users. Before major sprints, plan a light UX audit to catch issues early. If your team lacks capacity, consider a short-term partner or plan to hire UI/UX designers who can build and test faster with your product team.

Start your next test now

You now have a simple, strong playbook. Pick one page. Pick one problem. Draft one clear idea. Launch an ab split test this week. Keep your focus on money metrics and user joy. Use clean data and tidy notes. Ship winners in safe steps. Need help with deeper checks, color contrast, or flows? Bring in tools, audits, and smart partners when needed. Your next ab split test can raise sales. Start today, learn fast, and keep winning.